Architecture Of Data Warehouse

If you are looking for the architecture of a data warehouse, you have come to the right place. We have images, pictures, photos, wallpapers, and more on the topic. This page also offers a variety of image types, such as PNG, JPG, animated GIFs, pic art, logos, black and white, and transparent images.

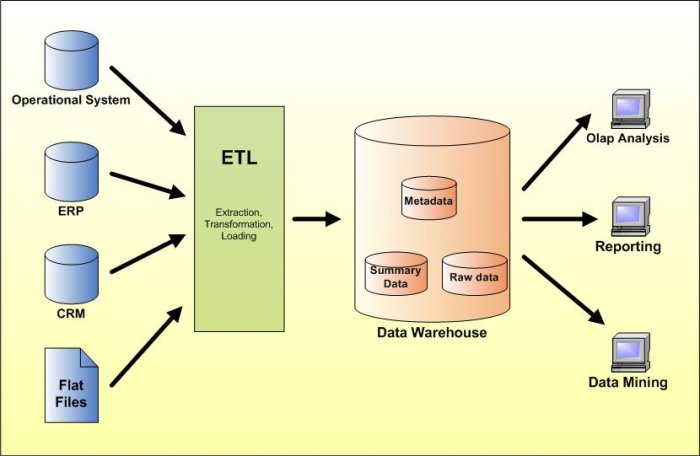

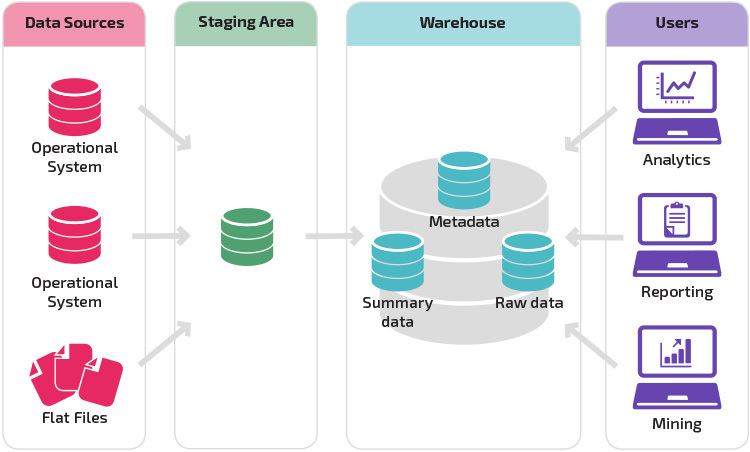

Informatica Tutorial: Data Warehouse Architecture

Data Warehouse Architecture